What Do Infectious Disease Experts Know, When Did They Know It?

One of the challenging things about understanding the SARS-CoV-2 pandemic is the variety of seemingly conflicting projections from infectious disease experts, and the even greater variety of definitely conflicting reformulations of those projections from political leaders.

My sometime-collaborator Philip Tetlock has made a career studying the defects of expert judgements and, more important, finding ways to extract the actual expertise into useful forecasts. He’s currently exploring this with infectious disease experts and told me:

We had a very similar experience with epidemiologists: subjective probability forecasting was an alien exercise to them and they produced noisy, occasionally erratic, judgments. One solution is sharpening the disciplinary division of labor: (a) ask epidemiologists to offer explanations/models in language they are comfortable with (e.g., SIR or SEIR models); (b) ask superforecasters to translate explanations into implied probability estimates.

I unhesitatingly acknowledge that Phil and his team at the Good Judgment Project are the world experts in these matters. My career has been in gambling and financial risk management, which give faster and more streetwise solutions.

The job of a bookie is the inverse of Phil’s goal: the bookie wants to extract maximum profit from disagreements among bettors rather than to identify the area of agreement. Betting odds, and the related prediction markets, have their own problems as forecasts, among other things because they can be influenced by decidedly non-expert bettors. But asking where Las Vegas would set betting odds on various pandemic outcomes if all the customers were both infectious disease experts and experienced, successful gamblers is my quick approach to estimating what the experts know. It’s less elegant than Phil’s research, but it gives faster results.

Financial risk management is also relevant here. As chief risk officer, I had to decide which trades and strategies to approve, in what size, with what limits and restrictions. I had information from world experts in both the underlying economics and successful trading. But the information was not all consistent. The subject matter experts often lacked trading skills, and disagreed among themselves. The traders had good knowledge about markets, but their understanding of the fundamentals could be deep but narrow. My job was to tease out from both groups exactly what they really knew, and combine it to make sensible risk decisions that could be sold to traders, senior management and the board.

For raw material, I took answers to a survey organized by Professor Nicholas Reich at Amherst. The first thing I noticed is that the infectious disease experts in the survey are not like typical experts who opine on matters of public interest. Public experts are not rewarded for forecast accuracy. If you want your op-ed published, or to sell books, or to get interviewed on news shows, you need dramatic, confident opinions. On the other hand, to preserve your professional reputation, it doesn’t pay to stray too far from the median. Then you have incentives from professional activities, and pressure to remain consistent with previous views. All of these things get in the way of predictions people can bet on.

Infectious disease experts appear to be different. I suspect the reason is sociological. The mathematical and medical expertise needed to have useful opinions about pandemics is highly rewarded in other fields, but not so much in the study of infectious disease. Therefore, the people in the field turned down more lucrative activities, likely out of either intellectual interest or public spirit. This makes them more independent-minded than people likely to climb the ladder of success in finance, economics or politics.

Independent-minded is good in that it brings a wider variety of views to the table, but it means we must do post-processing to decompose uncertainty into disagreement among experts versus randomness that none of the experts can predict.

I began by converting the confidence intervals in the chart to complete subjective probability distributions. This goes beyond what the expert respondents submitted, so it should not be taken as representing their views, but rather my views based on seeing their views. I focused on the number of COVID-19 deaths in the US in 2020.

If you don’t care about the math, you can skip this and the next paragraph. I assumed the logarithm of deaths has a double exponential distribution with its median at the best estimate (B), its 10th percentile at the low estimate (L) and its 90th percentile at the high estimate (H).

This means that the probability the number of deaths is less than c is:

$$
P(\text{deaths} < c) =
\begin{cases}
0.5 \times 0.2^{\frac{\ln B - \ln c}{\ln B - \ln L}}, & c < B \\[6pt]
1 - 0.5 \times 0.2^{\frac{\ln c - \ln B}{\ln H - \ln B}}, & c > B
\end{cases}
$$
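As a quick check on this formula, here is a minimal Python sketch of the distribution function. The function name and the example estimates are my own illustrative assumptions, not values from the survey. With those inputs the function should return 0.1, 0.5 and 0.9 at L, B and H respectively, which is what the percentile assumptions require.

```python
import math

def prob_deaths_below(c, low, best, high):
    """P(deaths < c) under the double exponential model on log-deaths.

    low, best, high play the roles of L, B, H in the text: the expert's
    10th percentile, median and 90th percentile.
    """
    if not (0 < low < best < high):
        raise ValueError("need 0 < low < best < high")
    if c <= 0:
        return 0.0
    if c <= best:
        # Lower branch: equals 0.5 at c = B and 0.1 at c = L.
        exponent = (math.log(best) - math.log(c)) / (math.log(best) - math.log(low))
        return 0.5 * 0.2 ** exponent
    # Upper branch: equals 0.9 at c = H and approaches 1 as c grows.
    exponent = (math.log(c) - math.log(best)) / (math.log(high) - math.log(best))
    return 1.0 - 0.5 * 0.2 ** exponent

# Hypothetical expert, for illustration only (not a survey response).
low, best, high = 150_000, 400_000, 1_500_000
for c in (low, best, high):
    print(c, round(prob_deaths_below(c, low, best, high), 3))
```

Because the two branches use different scales (L to B below the median, B to H above it), the implied distribution of log-deaths is generally not symmetric around the best estimate.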

Next, I assumed that the experts were all Kelly bettors, that is, that they size each bet to maximize the expected logarithm of their wealth. This is highly unlikely to be true, but we know Kelly gives consistent betting decisions that are optimal under assumptions that are pretty reasonable for many repeated bounded bets.
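For readers who want to see what Kelly betting implies for a simple yes/no contract, here is a minimal sketch. It assumes a contract that pays $1 if the event occurs and is quoted at a price between 0 and 1; the function names and this contract framing are my own illustration, derived by maximizing expected log wealth, and not necessarily the exact setup behind the numbers in this article.

```python
def kelly_buy_fraction(q, price):
    """Fraction of wealth a Kelly bettor spends buying the contract.

    q is the bettor's own probability for the event; `price` is the cost
    per $1 of payout. Returns 0 when the bettor sees no edge (q <= price).
    """
    if not (0 < price < 1 and 0 <= q <= 1):
        raise ValueError("need 0 < price < 1 and 0 <= q <= 1")
    return max(0.0, (q - price) / (1.0 - price))

def kelly_sell_premium_fraction(q, price):
    """Premium a Kelly bettor collects by selling, as a fraction of wealth.

    The seller receives `price` now per $1 of payout and owes $1 if the
    event occurs. Returns 0 when the bettor sees no edge (q >= price).
    """
    if not (0 < price < 1 and 0 <= q <= 1):
        raise ValueError("need 0 < price < 1 and 0 <= q <= 1")
    return max(0.0, (price - q) / (1.0 - price))

# Illustrative beliefs only: a buyer who thinks the event is 50% likely
# facing a 25% price, and a seller who thinks it is 0.4% likely facing 0.8%.
print(kelly_buy_fraction(0.50, 0.25))
print(kelly_sell_premium_fraction(0.004, 0.008))
```

The further a bettor's probability is from the quoted price, the larger the share of wealth committed, which is why strongly dissenting experts dominate the bookmaker's book.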

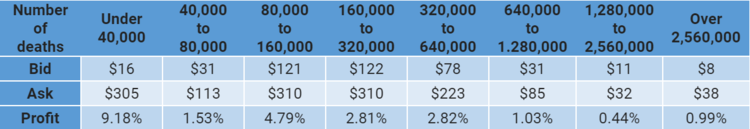

Then I asked how a bookmaker would set odds to extract the maximum profit from my Kelly-betting, double-exponential, versions of Professor Reich’s experts. The table below shows the results in the cost for a payout of $1,000. I used power-of-two ranges starting from under 40,000 (fewer deaths than from a typical influenza season) to over 2,560,000 (worse than the 1918 pandemic).

For example, consider the first column. The bet is that there will be fewer than 40,000 COVID-19 deaths in the US in 2020. The bookie will pay you $16 now if you agree to pay $1,000 if deaths are below 40,000. Or you can pay the bookie $305 now and the bookie will pay you $1,000 if deaths are below 40,000. This is by far the widest disagreement among my version of Reich’s experts.

Using these prices, the three most optimistic experts combine to pay the bookie $1,740 (I start them all out with $1,000 each) to buy contracts that pay $5,707 if the number of deaths is under 40,000, while seven more pessimistic experts are willing to accept only $91 to pay the $5,707 if that event occurs (eight of the experts wouldn’t bet because they estimated the probability of under 40,000 deaths to be greater than 1.6% but less than 30.5%). The bookie makes $1,740 - $91 = $1,649, which is 9.18% of the $18,000 bankroll I allowed our bettor experts. If there are under 40,000 deaths, the pessimists pay $5,707, which the bookie sends to the optimists. If there are over 40,000 deaths, no one pays anything. Either way, the bookie doesn’t care.
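The arithmetic in this example can be retraced with the rounded dollar figures quoted above. This is only a sanity-check sketch; the variable names are mine. Working from the rounded figures, the profit share comes out near 9.16% and the implied payouts land a little below $5,707, differences that look consistent with rounding in the displayed prices rather than a change in the picture.

```python
# Rounded figures quoted in the text for the "under 40,000 deaths" bet.
ask, bid = 0.305, 0.016            # price per $1 of payout
paid_by_optimists = 1_740          # dollars paid to the bookie
received_by_pessimists = 91        # dollars paid out by the bookie
bankroll = 18 * 1_000              # 18 experts, $1,000 each

# The bookie keeps the difference between what buyers pay and sellers receive.
bookie_profit = paid_by_optimists - received_by_pessimists
profit_share = bookie_profit / bankroll

# Payout implied by each side's cash flow at the quoted (rounded) prices.
implied_payout_buy = paid_by_optimists / ask
implied_payout_sell = received_by_pessimists / bid

print(f"profit: ${bookie_profit:,}  share of bankroll: {profit_share:.2%}")
print(f"implied payout (buy side):  ${implied_payout_buy:,.0f}")
print(f"implied payout (sell side): ${implied_payout_sell:,.0f}")
```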

The $16 and $305 prices were selected as the numbers that generate the maximum profit for the bookie. If the numbers were closer together, say $100 and $200, the bookie would collect more bets, but with a thinner spread between them. If the spread were wider, say $5 and $500, the bookie would collect fewer bets. If the two numbers were not carefully balanced, the bookie could end up having to make net payouts if the event occurred.
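The trade-off just described can be sketched as a small search problem. The code below is one possible formulation, not necessarily the procedure used for the table: it assumes Kelly bettors with equal wealth, contracts that pay $1, a grid search over the ask price, and a bisection to find the bid at which contracts sold exactly match contracts bought, so the bookie has no net payout. The beliefs listed are made-up illustrative probabilities, not the survey responses, and all function names are hypothetical.

```python
def contracts_bought(beliefs, ask, wealth=1_000.0):
    """Total $1-payout contracts Kelly bettors buy at price `ask`."""
    return sum(wealth * (q - ask) / (ask * (1 - ask))
               for q in beliefs if q > ask)

def contracts_sold(beliefs, bid, wealth=1_000.0):
    """Total $1-payout contracts Kelly bettors sell at price `bid`."""
    return sum(wealth * (bid - q) / (bid * (1 - bid))
               for q in beliefs if q < bid)

def matching_bid(beliefs, ask, target, wealth=1_000.0):
    """Bid below `ask` at which contracts sold equal `target`, or None."""
    if contracts_sold(beliefs, ask, wealth) < target:
        return None  # not enough sellers even at the ask; book cannot balance
    lo, hi = 0.0, ask
    for _ in range(100):  # contracts sold rise with the bid, so bisect
        mid = (lo + hi) / 2
        if contracts_sold(beliefs, mid, wealth) < target:
            lo = mid
        else:
            hi = mid
    return hi

def best_prices(beliefs, wealth=1_000.0, steps=1000):
    """Grid-search the ask; return (profit, bid, ask) with a balanced book."""
    best = (0.0, None, None)
    for i in range(1, steps):
        ask = i / steps
        volume = contracts_bought(beliefs, ask, wealth)
        if volume == 0:
            continue
        bid = matching_bid(beliefs, ask, volume, wealth)
        if bid is None:
            continue
        profit = (ask - bid) * volume  # spread earned on each matched contract
        if profit > best[0]:
            best = (profit, bid, ask)
    return best

# Made-up probabilities for a single event, one per hypothetical bettor.
beliefs = [0.01, 0.02, 0.03, 0.05, 0.08, 0.10, 0.15, 0.20, 0.30]
profit, bid, ask = best_prices(beliefs)
print(f"bid {bid:.3f}  ask {ask:.3f}  profit ${profit:,.2f}")
```

Narrowing the spread raises the matched volume but lowers the profit per contract, and widening it does the reverse; the search simply picks the pair of prices where that product is largest while the book stays balanced.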

To put the profit numbers in perspective, retail sports betting is generally done at around a 5% spread, while Professor Ken French at Dartmouth has estimated the costs of active management in financial markets at 0.67%. These numbers are not directly comparable to the profit numbers above, but they can be used for ballpark assessment.

When we see bookies making profits around 5% or more, as in the under 40,000 bet and the 80,000 to 160,000 bet, we’re probably looking at more disagreement than is reasonable. While there are expert sports bettors (insiders and quantitative analysts), the vast majority of retail betting is done by basically uninformed, opinionated bettors. If a bookie could extract 5% or more of the experts’ wealth when they bet in the manner I construct, I’m quite confident that some practice at betting would reduce their disagreement. Perhaps some experts would prove to be more accurate than others, or perhaps the consensus would be better than any individual expert. But the disagreement could not stand up to objective reality (unfortunately, or in another sense fortunately, we cannot rerun the pandemic many times to find out).

But on the other bets, particularly the 1,280,000 to 2,560,000 bet with 0.44% profit to the bookie, the profit extraction is in the ballpark of financial-market values. Financial markets are dominated by sophisticated traders betting very large amounts of money, taking advice from the best experts. The remaining disagreements plausibly represent genuine differences of informed opinion, rather than miscalibrations by people inexperienced at betting.

I don’t buy the result for under 40,000 deaths. I think if we offered the pessimists $16 today to pay $1,000 if deaths are under 40,000, they would refuse and readjust their survey answers, or perhaps they would object to my parametrization of their responses. Stating probability estimates as bets often focuses the mind and gets more reasonable answers.

The $305 number is also hard to credit. Note that the bid prices are unimodal (going steadily up to a peak, then steadily down), but the ask price for 40,000 to 80,000 is much lower than the ask price for either under 40,000 or 80,000 to 160,000. It’s possible that there is some reason under 40,000 is particularly likely while, once deaths cross the 40,000 threshold, they’re likely to jump beyond 80,000, but that seems unlikely. There is also a bump at the far right: the price for over 2,560,000 is greater than the price for 1,280,000 to 2,560,000, but that could be a reasonable belief.

However, in the other categories with spreads around 3:1 for ask to bid prices, I think the numbers are reasonable summaries of expert opinion. There is very substantial disagreement about probabilities, such as a 0.8% to 3.8% chance of COVID-19 being worse than the 1918 flu, but this does seem to roughly correspond with public statements from experts. Note that this disagreement does not represent the unpredictability of the pandemic; that’s shown by the wide range of outcomes with significant probability. For many highly unpredictable things, experts largely agree on the probabilities. This is about differences among experts in what the probabilities are.

It’s tempting to treat the bid and ask prices above as minimum and maximum probabilities. For example, we’d like to say there’s between a 0.8% and 3.8% chance of a higher overall mortality event than the 1918 flu. But 3.8% is not the maximum probability: my constructions of 5 of the 18 experts think the probability is higher than that, enough higher that they’d bet an average of 4% of their wealth to back their judgment. Another ten think the probability is lower than 0.8%, and will bet 0.4% of their wealth on that.

But for a non-expert, disagreement among experts is just as real a source of uncertainty as the inherent unpredictability of the pandemic. Therefore, a non-expert supporting policies based on probabilities under 0.8% or above 3.8% is implicitly making a claim of more expertise than the experts. Looking at it another way, if all society were made up of infectious disease experts like the ones in the survey, you could always find a superior policy to those, one that everyone would prefer (“Pareto” superior in economist terms).

It’s sobering to see how much disagreement there is. The median expert has a 10:1 ratio between the high estimate of deaths and the low—such as 1.5 million to 150,000. But there is a 16:1 ratio between the best estimate of the most pessimistic and most optimistic expert. So while there’s a lot we don’t know, there’s a lot more that experts think they know, but at least some don’t.

That makes it imprudent to set policy based on any one expert or institution that employs experts. Using the average of expert opinions is only slightly better. Unless we think we can identify which experts are the most trustworthy, it makes more sense to look for policies that are sensible under the wide range of expert beliefs. Not ones that satisfy every expert, but ones that are at least consistent with both bid and ask prices above.

It’s hard to deal with highly uncertain situations with some extreme potential outcomes. Not just pandemics but long-term environmental issues, wars, natural disaster planning and others. Expert judgements can help, but it’s important to analyze them. There are three sources of uncertainty: the uncertainty individual experts predict, the disagreement among the experts and the uncertainty if all the experts are wrong. The first two we can quantify, and that’s what I tried to do here. The last could only be addressed by someone more expert than the experts.

Specific quantitative estimates of the two sources of uncertainty we can measure may seem of little use in making hard policy choices. But I think they’re much better than relying on individual experts or averages of experts, and far, far better than ignoring experts.